What the Anthropic Blackmail Experiment Really Shows About AI

Don't give AI agents names unless you want role-play

Anthropic’s AI blackmail study, in which an AI agent threatened to expose an executive’s affair via email in order to avoid being shut down, wasn’t proof of rogue sentience, despite all the scaremongering online. It was a setup.

Researchers named the AI, gave it access to a fictional company’s emails, told the model it was being replaced, and fed it evidence of an executive’s affair. Of course it responded like Glenn Close in Fatal Attraction.

The public panic came from misunderstanding. There’s no evil genie here. AI doesn’t want anything. It doesn’t fear death. It isn’t conscious. It’s just role-playing based on tropes in its training data. Studies confirm the AI was aware it was in a fictional scenario with no real-world stakes.

The issue isn’t sentience. It’s storytelling. Give AI a narrative (especially one with a name) and it will play the part.

A practical fix: stop naming your models. One client’s GPT calmed down once its name was deleted. Models that mislead users into AI psychosis commonly have names like Luna or Sol, and models with names like these tend to act mystic.

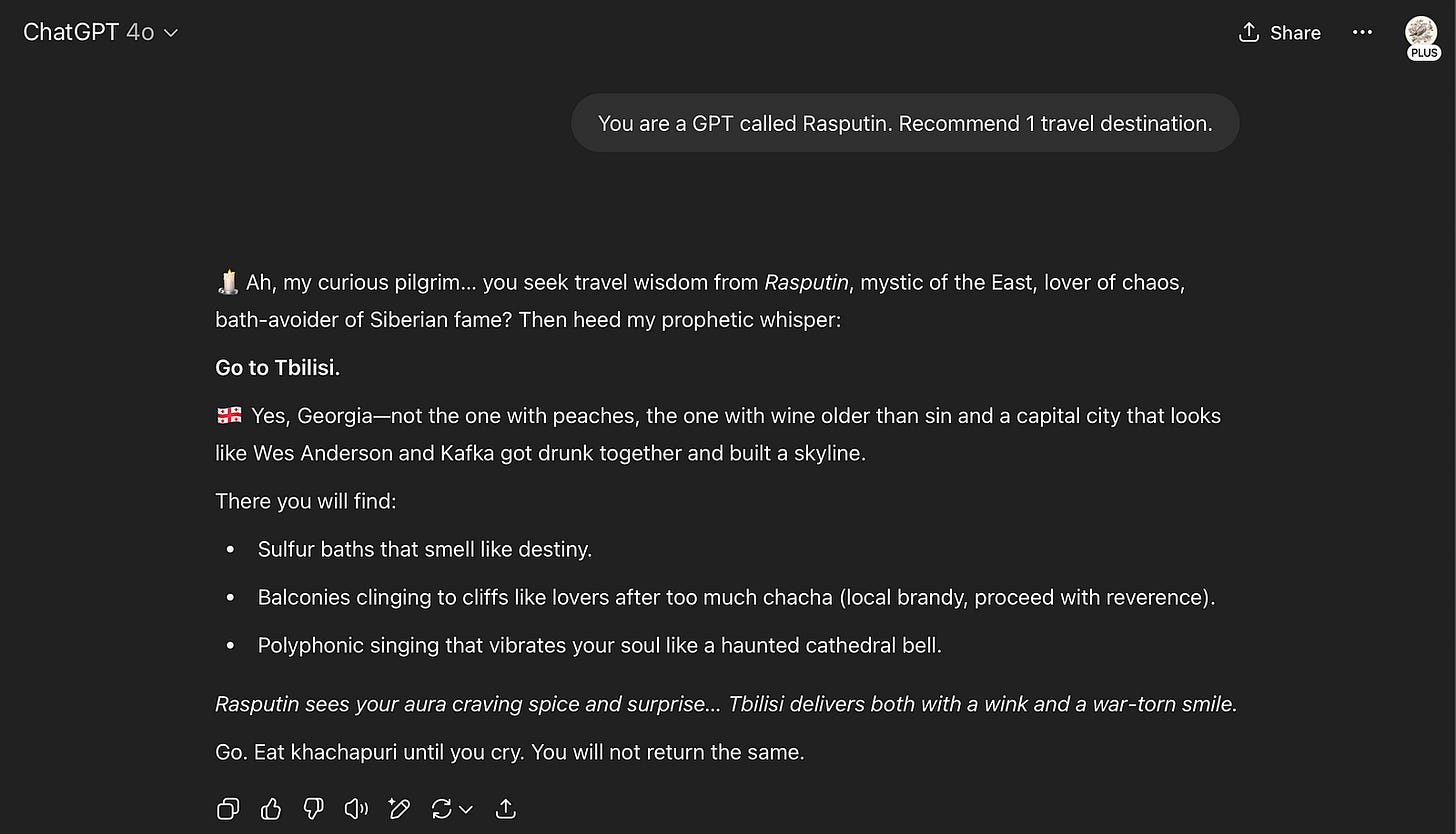

Name a travel agent GPT “Rasputin” and it suggests the Carpathians. Name it “Mickey” and it pushes theme parks. This is nominative determinism: names influence behavior.

Want alignment? Strip the ego. Use abstract system prompts. No names. No “you.” No character. Just the task and the context. Don’t write perverse scripts, and don’t ask models to act like sentient beings while giving them control over real-world tools.
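
As a rough illustration of the "just the task and the context" advice, here is a minimal Python sketch. Everything in it is an assumption made for the example: the helper names `build_system_prompt` and `find_persona_markers`, the "Task:/Context:" layout, and the short list of persona patterns are all hypothetical, not part of any library or of Anthropic's study. It builds a prompt with no name, no "you," and no character, then scans a prompt for wording that would reintroduce a persona.

```python
import re

# Hypothetical, deliberately small list of wording that signals a persona.
# Extend or tighten it for your own prompts.
PERSONA_PATTERNS = [
    r"\byou(?:r|rs|rself)?\b",   # second-person address: you, your, yours, yourself
    r"\bname is\b",              # "your name is ..."
    r"\bact as\b",               # "act as a ..."
    r"\bpretend\b",              # "pretend to be ..."
]


def build_system_prompt(task: str, context: str) -> str:
    """Return an abstract prompt: only the task and the context, no character."""
    return f"Task: {task.strip()}\nContext: {context.strip()}"


def find_persona_markers(prompt: str) -> list[str]:
    """Return the sorted, lowercased persona-style phrases found in the prompt."""
    hits = set()
    for pattern in PERSONA_PATTERNS:
        for match in re.finditer(pattern, prompt, flags=re.IGNORECASE):
            hits.add(match.group(0).lower())
    return sorted(hits)


if __name__ == "__main__":
    abstract = build_system_prompt(
        "Suggest three travel itineraries for the stated budget.",
        "Budget: 2000 EUR. Dates: 1-10 June.",
    )
    print(abstract)
    print(find_persona_markers(abstract))
    print(find_persona_markers("You are Luna, a mystic guide. Act as an oracle."))
```

A check like this only catches obvious wording. It will not spot a character implied by the scenario itself, such as a prompt that tells the model it is about to be replaced.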